IAM role authentication to Databricks AWS staging buckets

Authenticating with IAM Roles

See Using AWS IAM roles to access S3 buckets for detailed documentation on configuring Polytomic connections with IAM roles.

When using Databricks on AWS with IAM role authentication, you need to configure both Polytomic and Databricks to access your S3 staging bucket. This requires setting up an IAM role with a trust policy that allows both services to assume the role.

Configuring Databricks Storage Credential

When Polytomic stages data in S3, Databricks needs its own credentials to read that data. This is done through a Storage Credential in Unity Catalog.

When You Need a Storage Credential

You need to configure a Storage Credential if your staging bucket is not configured as an External Location in Databricks. If you need a Storage Credential, you'll provide its name to Polytomic in the connection configuration.

Creating the Storage Credential

- In your Databricks workspace, navigate to Catalog Explorer.

- Click the External Data button, then go to the Credentials tab.

- Click Create credential.

- Select AWS IAM Role as the credential type.

- Enter a name for the credential (e.g., polytomic-staging-credential).

- Enter the same IAM Role ARN that you configured in Polytomic.

- Click Create.

- Copy the External ID that Databricks generates; you'll need it for the trust policy.

For detailed instructions, see the Databricks documentation on creating storage credentials.

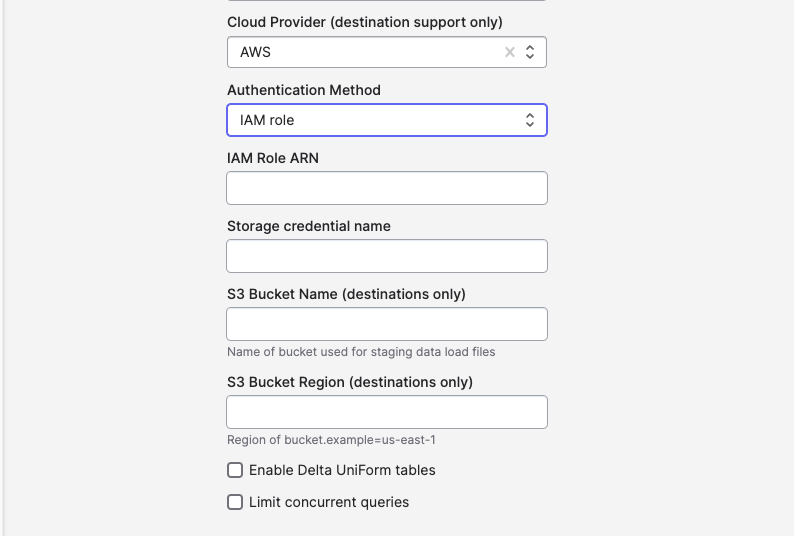

Configuring Polytomic

In your Databricks connection configuration:

- Switch the Authentication Method dropdown to IAM role.

- Enter the IAM Role ARN that both Polytomic and Databricks will use.

- Enter the name of the Storage Credential you created in Databricks, if applicable.

- Click Save.

After saving, Polytomic will generate an External ID for your connection. You'll need this External ID when configuring the IAM role trust policy.

Configuring the IAM Role

S3 Permissions Policy

The IAM role needs the following permissions for the S3 staging bucket:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "PolytomicAndDatabricksS3Access",
      "Effect": "Allow",
      "Action": [
        "s3:GetObject",
        "s3:ListBucket",
        "s3:PutObject",
        "s3:DeleteObject",
        "s3:GetBucketLocation",
        "s3:ListBucketMultipartUploads",
        "s3:ListMultipartUploadParts",
        "s3:AbortMultipartUpload"
      ],
      "Resource": [
        "arn:aws:s3:::your-staging-bucket/*",
        "arn:aws:s3:::your-staging-bucket"
      ]
    },
    {
      "Sid": "AllowSelfAssume",
      "Effect": "Allow",
      "Action": "sts:AssumeRole",
      "Resource": "arn:aws:iam::<YOUR-AWS-ACCOUNT-ID>:role/<YOUR-ROLE-NAME>"
    }
  ]
}

Replace:

- your-staging-bucket with your S3 bucket name

- <YOUR-AWS-ACCOUNT-ID> with your AWS account ID

- <YOUR-ROLE-NAME> with the name of this IAM role

Note: The self-assume statement is required by Databricks for storage credentials. For more information, see the AWS blog post on IAM role trust policy updates.
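To avoid hand-editing the placeholders, the permissions policy above can be generated from the three values it depends on. The following is a minimal Python sketch, not part of Polytomic or Databricks tooling; the function name build_permissions_policy and the sample bucket, account ID, and role name are hypothetical.

```python
import json

# Actions copied from the permissions policy shown above.
S3_ACTIONS = [
    "s3:GetObject",
    "s3:ListBucket",
    "s3:PutObject",
    "s3:DeleteObject",
    "s3:GetBucketLocation",
    "s3:ListBucketMultipartUploads",
    "s3:ListMultipartUploadParts",
    "s3:AbortMultipartUpload",
]


def build_permissions_policy(bucket, account_id, role_name):
    """Return the permissions policy as a dict with the placeholders filled in.

    Hypothetical helper: it only mirrors the JSON document above.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PolytomicAndDatabricksS3Access",
                "Effect": "Allow",
                "Action": list(S3_ACTIONS),
                "Resource": [
                    f"arn:aws:s3:::{bucket}/*",  # objects in the bucket
                    f"arn:aws:s3:::{bucket}",    # the bucket itself
                ],
            },
            {
                # Self-assume statement required by Databricks storage credentials.
                "Sid": "AllowSelfAssume",
                "Effect": "Allow",
                "Action": "sts:AssumeRole",
                "Resource": f"arn:aws:iam::{account_id}:role/{role_name}",
            },
        ],
    }


if __name__ == "__main__":
    # Sample values are placeholders, not real resources.
    policy = build_permissions_policy(
        "my-staging-bucket", "123456789012", "polytomic-staging-role"
    )
    print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the IAM console as the role's permissions policy.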

Trust Policy for Dual Access

The trust policy must allow both Polytomic and Databricks to assume the role. This requires including both services' AWS principals and both External IDs:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": [
          "arn:aws:iam::568237466542:root",
          "arn:aws:iam::414351767826:role/unity-catalog-prod-UCMasterRole-14S5ZJVKOTYTL",
          "arn:aws:iam::<YOUR-AWS-ACCOUNT-ID>:role/<YOUR-ROLE-NAME>"
        ]
      },
      "Action": "sts:AssumeRole",
      "Condition": {
        "StringEquals": {
          "sts:ExternalId": [
            "<POLYTOMIC-EXTERNAL-ID>",
            "<DATABRICKS-EXTERNAL-ID>"
          ]
        }
      }
    }
  ]
}

Replace:

- <YOUR-AWS-ACCOUNT-ID> with your AWS account ID

- <YOUR-ROLE-NAME> with the name of this IAM role (for self-assuming)

- <POLYTOMIC-EXTERNAL-ID> with the External ID from your Polytomic connection

- <DATABRICKS-EXTERNAL-ID> with the External ID from your Databricks storage credential

Principal explanations:

- arn:aws:iam::568237466542:root - Polytomic's AWS account

- arn:aws:iam::414351767826:role/unity-catalog-prod-UCMasterRole-14S5ZJVKOTYTL - Databricks Unity Catalog's AWS role

- arn:aws:iam::<YOUR-AWS-ACCOUNT-ID>:role/<YOUR-ROLE-NAME> - Self-assuming capability (required by Databricks)

See the Databricks documentation for the correct service principal for GovCloud.
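The trust policy can be assembled the same way once both External IDs are known. This is a minimal Python sketch under the assumption that the two principal ARNs listed above apply to your deployment (they do not apply to GovCloud); build_trust_policy and its parameter names are hypothetical.

```python
import json

# Principals copied from the trust policy above.
POLYTOMIC_PRINCIPAL = "arn:aws:iam::568237466542:root"
DATABRICKS_UC_PRINCIPAL = (
    "arn:aws:iam::414351767826:role/unity-catalog-prod-UCMasterRole-14S5ZJVKOTYTL"
)


def build_trust_policy(
    account_id,
    role_name,
    polytomic_external_id,
    databricks_external_id,
    polytomic_principal=POLYTOMIC_PRINCIPAL,
):
    """Return a trust policy dict that lets Polytomic, Databricks, and the
    role itself assume the role. Hypothetical helper mirroring the JSON above.
    """
    self_arn = f"arn:aws:iam::{account_id}:role/{role_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "AWS": [polytomic_principal, DATABRICKS_UC_PRINCIPAL, self_arn]
                },
                "Action": "sts:AssumeRole",
                "Condition": {
                    # A list of values under StringEquals matches if any one
                    # of them equals the External ID sent by the caller.
                    "StringEquals": {
                        "sts:ExternalId": [
                            polytomic_external_id,
                            databricks_external_id,
                        ]
                    }
                },
            }
        ],
    }


if __name__ == "__main__":
    # Sample values are placeholders; use the External IDs shown in
    # Polytomic and Databricks.
    policy = build_trust_policy(
        "123456789012",
        "polytomic-staging-role",
        "polytomic-external-id",
        "databricks-external-id",
    )
    print(json.dumps(policy, indent=2))
```

The optional polytomic_principal argument exists so that a narrower Polytomic principal can be substituted without changing the rest of the policy.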

Alternative: More Restrictive Polytomic Principal

For additional security, you can restrict Polytomic's access to use a specific role instead of the root account:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": [
          "arn:aws:iam::568237466542:role/convox/prod-polytomic-ServiceRole-1ELGH39L0GCHT",
          "arn:aws:iam::414351767826:role/unity-catalog-prod-UCMasterRole-14S5ZJVKOTYTL",
          "arn:aws:iam::<YOUR-AWS-ACCOUNT-ID>:role/<YOUR-ROLE-NAME>"
        ]
      },
      "Action": "sts:AssumeRole",
      "Condition": {
        "StringEquals": {
          "sts:ExternalId": [
            "<POLYTOMIC-EXTERNAL-ID>",
            "<DATABRICKS-EXTERNAL-ID>"
          ]
        }
      }
    }
  ]
}

Troubleshooting

Storage Credential Validation Fails

If Databricks reports that the storage credential validation failed:

- Ensure the IAM role trust policy includes the Databricks Unity Catalog principal

- Verify the External ID matches what Databricks generated

- Confirm the self-assuming statement is present in both the trust policy and permissions policy
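The three checks above can be run against exported copies of the two policy documents. The following Python sketch is an illustration only: check_storage_credential_policies is a hypothetical helper, it pools principals and External IDs across all Allow statements rather than evaluating each statement separately, and it ignores Deny statements and wildcards.

```python
DATABRICKS_UC_PRINCIPAL = (
    "arn:aws:iam::414351767826:role/unity-catalog-prod-UCMasterRole-14S5ZJVKOTYTL"
)


def _as_list(value):
    """IAM accepts a single string wherever a list is allowed; normalize to a list."""
    if value is None:
        return []
    return [value] if isinstance(value, str) else list(value)


def _allow_statements(policy, action):
    """Yield Allow statements in `policy` that name `action` exactly."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a lone statement may be given as an object
        statements = [statements]
    for stmt in statements:
        if stmt.get("Effect") == "Allow" and action in _as_list(stmt.get("Action")):
            yield stmt


def check_storage_credential_policies(
    trust_policy, permissions_policy, role_arn, databricks_external_id
):
    """Return a list of human-readable problems; an empty list means all checks passed."""
    problems = []
    principals, external_ids = set(), set()
    for stmt in _allow_statements(trust_policy, "sts:AssumeRole"):
        principal = stmt.get("Principal")
        if isinstance(principal, dict):
            principals.update(_as_list(principal.get("AWS")))
        condition = stmt.get("Condition", {}).get("StringEquals", {})
        external_ids.update(_as_list(condition.get("sts:ExternalId")))

    if DATABRICKS_UC_PRINCIPAL not in principals:
        problems.append("trust policy is missing the Databricks Unity Catalog principal")
    if databricks_external_id not in external_ids:
        problems.append("trust policy is missing the Databricks External ID")
    if role_arn not in principals:
        problems.append("trust policy is missing the self-assuming principal")

    self_assume_allowed = any(
        role_arn in _as_list(stmt.get("Resource"))
        for stmt in _allow_statements(permissions_policy, "sts:AssumeRole")
    )
    if not self_assume_allowed:
        problems.append("permissions policy is missing the self-assume statement")
    return problems
```

Passing the role's trust policy, its permissions policy, the role ARN, and the External ID copied from the storage credential returns one message per failed check.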

Polytomic Cannot Assume Role

If Polytomic cannot connect:

- Verify the Polytomic principal is in the trust policy

- Ensure the External ID from Polytomic is included

- Check that the IAM role has the required S3 permissions
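For the last check, a quick way to spot a missing S3 permission is to compare the role's permissions policy against the action list from the S3 Permissions Policy section. This is a simplified Python sketch: missing_s3_actions is a hypothetical helper that looks only at Action entries in Allow statements (including `*` wildcards) and does not evaluate Resource scoping, Deny statements, or NotAction.

```python
from fnmatch import fnmatchcase

# Actions listed in the S3 Permissions Policy section.
REQUIRED_S3_ACTIONS = [
    "s3:GetObject",
    "s3:ListBucket",
    "s3:PutObject",
    "s3:DeleteObject",
    "s3:GetBucketLocation",
    "s3:ListBucketMultipartUploads",
    "s3:ListMultipartUploadParts",
    "s3:AbortMultipartUpload",
]


def missing_s3_actions(policy):
    """Return the required actions that no Allow statement in `policy` grants."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a lone statement may be given as an object
        statements = [statements]

    granted_patterns = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]
        # IAM action names are case-insensitive, so compare in lowercase.
        granted_patterns.extend(action.lower() for action in actions)

    return [
        required
        for required in REQUIRED_S3_ACTIONS
        if not any(fnmatchcase(required.lower(), p) for p in granted_patterns)
    ]
```

An empty result means every listed action appears in some Allow statement; it does not prove the statement's Resource covers the staging bucket, so that still needs a manual look.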

"Non-self-assuming role" Error

As of January 2025, Databricks blocks storage credentials that don't use self-assuming IAM roles. Ensure your trust policy includes the self-assuming principal: arn:aws:iam::<YOUR-AWS-ACCOUNT-ID>:role/<YOUR-ROLE-NAME>
