Redshift Serverless

Source

Polytomic's Redshift Serverless connection can use either the Redshift Data API or a VPC endpoint to access data stored in Redshift. Unlike our standard Redshift connector, it uses IAM roles for authorization.

Syncing from Redshift Serverless

The IAM role used for the connection must have permission for the following IAM actions:

- redshift-data:CancelStatement

- redshift-data:DescribeStatement

- redshift-data:DescribeTable

- redshift-data:ExecuteStatement

- redshift-data:GetStatementResult

- redshift-data:ListDatabases

- redshift-data:ListSchemas

- redshift-data:ListTables

- redshift-serverless:GetCredentials

As an example, this permission policy grants the necessary permissions for all Redshift Serverless workgroups in the account:

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"redshift-data:CancelStatement",

"redshift-data:DescribeStatement",

"redshift-data:DescribeTable",

"redshift-data:ExecuteStatement",

"redshift-data:GetStatementResult",

"redshift-data:ListDatabases",

"redshift-data:ListSchemas",

"redshift-data:ListTables",

"redshift-serverless:GetCredentials"

],

"Resource": "*"

}

]

}
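A missing action usually shows up only as an authorization error at sync time, so it can help to check a policy document before attaching it. The following is a minimal sketch using only the Python standard library; `missing_actions` is a hypothetical helper name, and the check looks only at the `Action` element of `Allow` statements (it ignores `Resource`, `Condition`, and `Deny`), so treat it as a sanity check rather than a full IAM evaluation.

```python
import json
from fnmatch import fnmatchcase

# Actions listed above as required for the connection's IAM role.
REQUIRED_ACTIONS = [
    "redshift-data:CancelStatement",
    "redshift-data:DescribeStatement",
    "redshift-data:DescribeTable",
    "redshift-data:ExecuteStatement",
    "redshift-data:GetStatementResult",
    "redshift-data:ListDatabases",
    "redshift-data:ListSchemas",
    "redshift-data:ListTables",
    "redshift-serverless:GetCredentials",
]


def missing_actions(policy_json: str) -> list[str]:
    """Return the required actions that no Allow statement in the policy lists.

    Raises ValueError if the document is not valid JSON (for example, a
    missing or trailing comma).
    """
    policy = json.loads(policy_json)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be a bare object
        statements = [statements]

    allowed_patterns = []
    for statement in statements:
        if statement.get("Effect") != "Allow":
            continue
        actions = statement.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]
        # IAM action names are case-insensitive, so compare in lowercase.
        allowed_patterns.extend(action.lower() for action in actions)

    return [
        required
        for required in REQUIRED_ACTIONS
        if not any(fnmatchcase(required.lower(), p) for p in allowed_patterns)
    ]
```

An empty list means every required action is covered; wildcards such as `redshift-data:*` are matched with simple glob rules.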

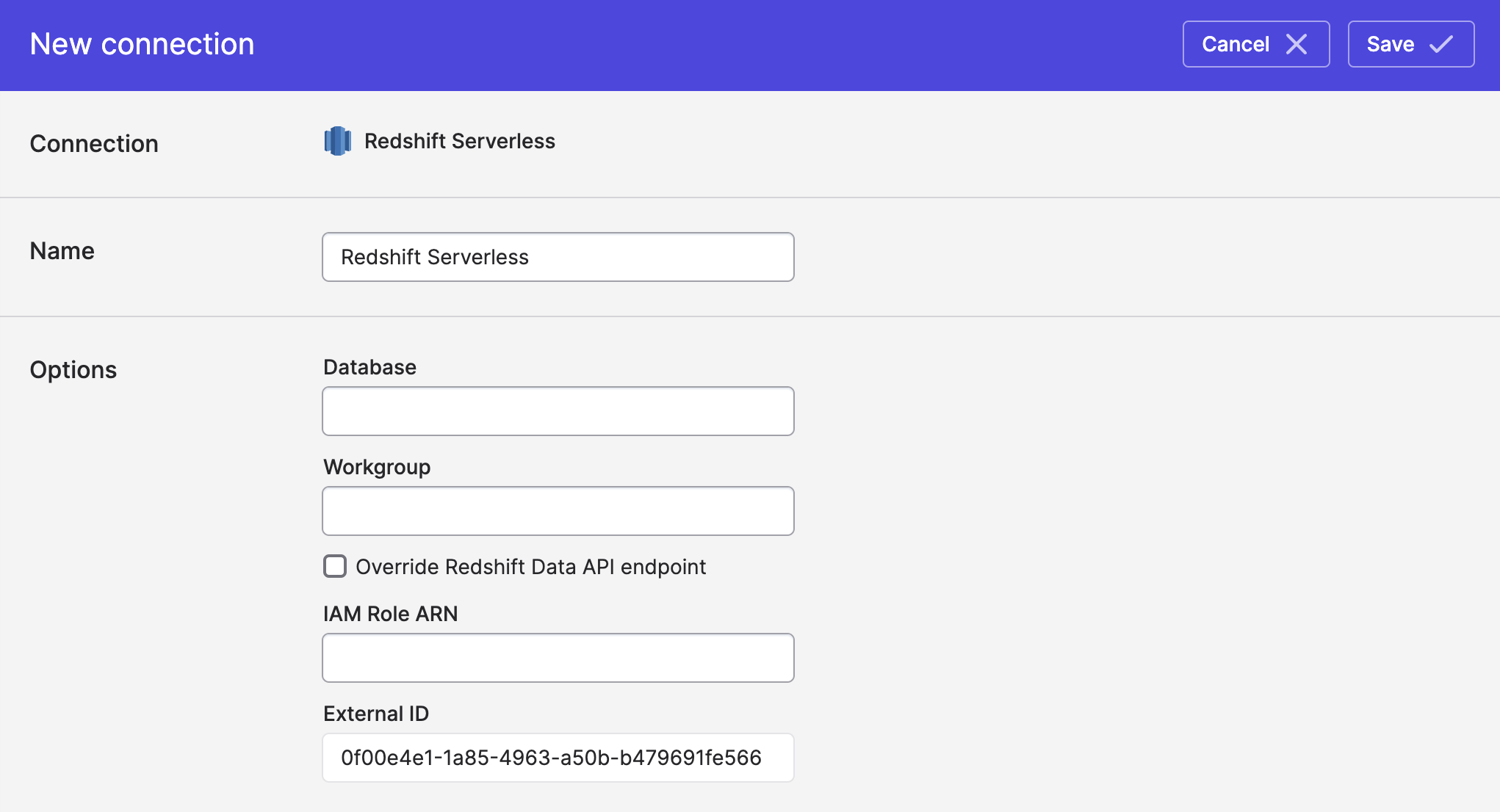

The role's trust policy must be configured to allow Polytomic (AWS Account ID 568237466542) to assume the role. An external identifier is displayed when the connection is created, which may be used to further limit access to the role.

As an example, your trust policy will look something like the following:

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"AWS": "arn:aws:iam::568237466542:root"

},

"Action": "sts:AssumeRole",

"Condition": {

"StringEquals": {

"sts:ExternalId": "a1efa791-4530-43a0-962d-74e2ccf18309"

}

}

}

]

}

The value for sts:ExternalId will be unique to your Polytomic organization and displayed when creating the connection.
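Because the external ID differs per organization, it may be convenient to generate the trust policy rather than edit the example by hand. The sketch below uses only the Python standard library; `build_trust_policy` and its `allow_redshift` flag are hypothetical names introduced for illustration, and the external ID passed in must be the value shown in your own connection form.

```python
import json

# Polytomic's AWS account, as given in the text above.
POLYTOMIC_PRINCIPAL = "arn:aws:iam::568237466542:root"


def build_trust_policy(external_id: str, allow_redshift: bool = False) -> dict:
    """Build a trust policy that lets Polytomic assume the role.

    When allow_redshift is True, a second statement lets the Redshift
    service assume the role as well (needed for UNLOAD and for writes).
    """
    if not external_id:
        raise ValueError("external_id must be the value shown by Polytomic")

    statements = [
        {
            "Effect": "Allow",
            "Principal": {"AWS": POLYTOMIC_PRINCIPAL},
            "Action": "sts:AssumeRole",
            "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
        }
    ]
    if allow_redshift:
        statements.append(
            {
                "Effect": "Allow",
                "Principal": {"Service": "redshift.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        )
    return {"Version": "2012-10-17", "Statement": statements}


if __name__ == "__main__":
    # Placeholder only; substitute the external ID from your connection.
    print(json.dumps(build_trust_policy("<your-external-id>"), indent=2))
```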

Using Redshift Serverless as a Bulk Sync source

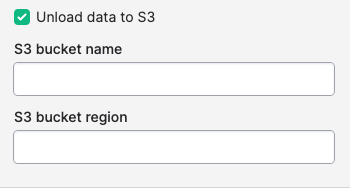

Redshift Serverless connections can be used as the source for a bulk sync (i.e. an ELT workload into a data warehouse or cloud storage bucket). If you have an S3 bucket available, Polytomic can use UNLOAD to extract data from Redshift, which may be more efficient.

To configure Polytomic to use UNLOAD, check "Unload data to S3" in the connection configuration and enter the bucket name and region.

The IAM role used by the connection must have permission to read, write, and list objects in the bucket.

Authenticating with IAM Roles

See Using AWS IAM roles to access S3 buckets for detailed documentation on configuring Polytomic connections with IAM roles.

Redshift must also be able to assume the role in order to perform the unload operation. When using UNLOAD, the trust policy will look something like the following:

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"AWS": "arn:aws:iam::568237466542:root"

},

"Action": "sts:AssumeRole",

"Condition": {

"StringEquals": {

"sts:ExternalId": "a1efa791-4530-43a0-962d-74e2ccf18309"

}

}

},

{

"Effect": "Allow",

"Principal": {

"Service": "redshift.amazonaws.com"

},

"Action": "sts:AssumeRole"

}

]

}

Syncing to Redshift Serverless

If you wish to write to Redshift, there are three requirements:

- A Redshift role with write permission to the tables, schemas, or database that you want to load data into.

- An S3 bucket in the same region as your Redshift server. Polytomic will use this bucket as a staging area for intermediate data. Files are removed once jobs complete. The IAM role used to access Redshift must also have read and write permission on this bucket.

- Redshift must be able to assume the IAM role used to connect.

Create a Redshift role

You can create a role for Polytomic with the following query.

CREATE ROLE polytomic;

Write permissions

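Here is a query you can run in Redshift to grant general write permissions (this assumes your database name is my_database and your Redshift user/role is polytomic). If polytomic is a role rather than a user, Redshift's GRANT syntax expects TO ROLE polytomic in place of TO polytomic: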

GRANT TEMP ON DATABASE my_database TO polytomic;

GRANT CREATE ON DATABASE my_database TO polytomic;

For those who want finer control, here is the list of permissions Polytomic requires:

- TEMP

- INSERT, DELETE, and UPDATE on any tables that you intend to write into (including future tables, if applicable).

- TRUNCATE

- ALTER

- CREATE on tables

- CREATE on schemas

- DROP
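The finer-grained permissions above can be turned into GRANT statements for a single schema. The sketch below is one possible mapping, not Polytomic's official script: `grant_statements` is a hypothetical helper, it assumes the grantee is a Redshift role (hence `TO ROLE`), it treats "CREATE on schemas" as the privilege that allows creating tables in the schema, and it covers only tables that already exist. Verify the exact privilege names against the Redshift GRANT documentation for your environment before running the output.

```python
import re

# Table-level privileges taken from the list above.
TABLE_PRIVILEGES = ["INSERT", "UPDATE", "DELETE", "TRUNCATE", "ALTER", "DROP"]

_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def _check_identifier(name: str) -> str:
    """Reject anything that is not a plain unquoted SQL identifier."""
    if not _IDENTIFIER.match(name):
        raise ValueError(f"not a simple identifier: {name!r}")
    return name


def grant_statements(database: str, schema: str, role: str = "polytomic") -> list[str]:
    """Return GRANT statements for one database and one schema.

    Assumption: `role` was created with CREATE ROLE, so TO ROLE is used.
    Tables created later are not covered by these statements.
    """
    database = _check_identifier(database)
    schema = _check_identifier(schema)
    role = _check_identifier(role)
    table_privs = ", ".join(TABLE_PRIVILEGES)
    return [
        f"GRANT TEMP ON DATABASE {database} TO ROLE {role};",
        f"GRANT CREATE ON SCHEMA {schema} TO ROLE {role};",
        f"GRANT {table_privs} ON ALL TABLES IN SCHEMA {schema} TO ROLE {role};",
    ]


if __name__ == "__main__":
    # my_database comes from the example above; "public" is a placeholder schema.
    for statement in grant_statements("my_database", "public"):
        print(statement)
```

Generating the statements from validated identifiers keeps the role and schema names consistent across all three grants and avoids pasting unchecked input into SQL.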

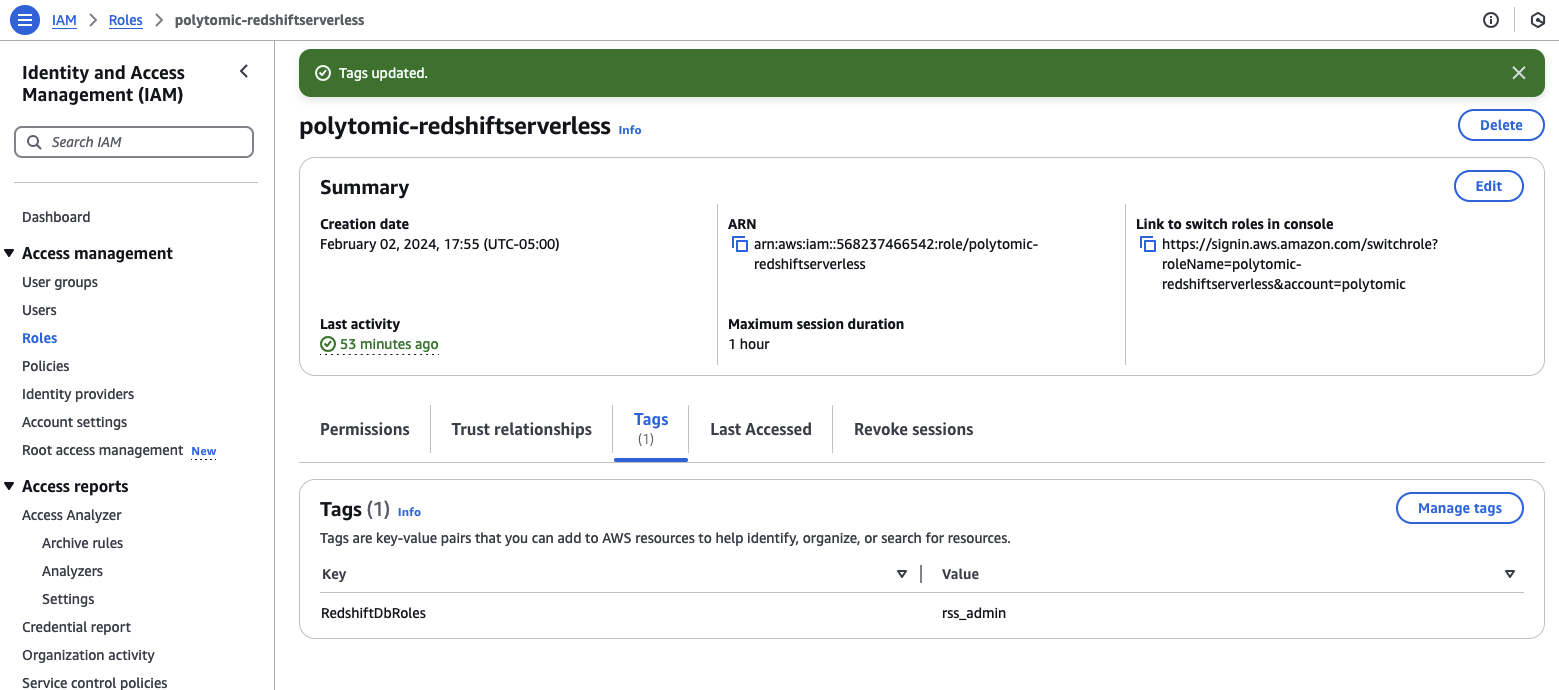

Assign the Redshift role to your IAM role

Tag the IAM role in your AWS console with the Redshift role from the previous step using the RedshiftDbRoles tag. In the image above, the role created in Redshift with write permissions happens to be called rss_admin; you may pick another name. See AWS Documentation for more information on binding Redshift and IAM roles.
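If you manage IAM through scripts rather than the console, the tag can be built programmatically. The sketch below only constructs the tag; `redshift_db_roles_tag` is a hypothetical helper, and the colon-separated value format for multiple roles follows the AWS documentation for the RedshiftDbRoles tag. The resulting dictionary has the Key/Value shape that IAM tagging calls accept, for example in the `Tags` list of boto3's `iam.tag_role`.

```python
def redshift_db_roles_tag(db_roles: list[str]) -> dict:
    """Build the RedshiftDbRoles tag for one or more Redshift database roles.

    Multiple roles are joined with colons, so a role name containing a
    colon cannot be represented and is rejected.
    """
    if not db_roles:
        raise ValueError("at least one Redshift role is required")
    for role in db_roles:
        if not role or ":" in role:
            raise ValueError(f"invalid Redshift role name: {role!r}")
    return {"Key": "RedshiftDbRoles", "Value": ":".join(db_roles)}


if __name__ == "__main__":
    # "polytomic" is the role created earlier with CREATE ROLE.
    print(redshift_db_roles_tag(["polytomic"]))
```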

S3 bucket

Polytomic will use an S3 bucket to stage data that will be loaded into Redshift; if you are also using Redshift as a source with UNLOAD, the same bucket and permissions will suffice.

The IAM role must have permission to access the S3 bucket.

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"s3:PutObject",

"s3:GetObject",

"s3:GetObjectAttributes",

"s3:ListBucket",

"s3:DeleteObject"

],

"Resource": [

"arn:aws:s3:::unload-example",

"arn:aws:s3:::unload-example/*"

]

}

]

}
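The example policy uses the placeholder bucket unload-example. As a minimal sketch, the same policy can be generated for your own bucket name; `build_s3_staging_policy` is a hypothetical helper, and the action list is copied from the example above.

```python
import json

# Actions copied from the example policy above.
S3_ACTIONS = [
    "s3:PutObject",
    "s3:GetObject",
    "s3:GetObjectAttributes",
    "s3:ListBucket",
    "s3:DeleteObject",
]


def build_s3_staging_policy(bucket: str) -> dict:
    """Build the staging-bucket policy for the given bucket name.

    Both the bucket ARN (for s3:ListBucket) and the object ARN pattern
    (for the object-level actions) are listed as resources.
    """
    if not bucket or "/" in bucket:
        raise ValueError("bucket must be a bare bucket name, without a path")
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": list(S3_ACTIONS),
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_s3_staging_policy("unload-example"), indent=2))
```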

Role assumption

Redshift must also have permission to assume the IAM role in order to read the staged data from the bucket. An example of a full trust policy follows.

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"AWS": "arn:aws:iam::568237466542:root"

},

"Action": "sts:AssumeRole",

"Condition": {

"StringEquals": {

"sts:ExternalId": "a1efa791-4530-43a0-962d-74e2ccf18309"

}

}

},

{

"Effect": "Allow",

"Principal": {

"Service": "redshift.amazonaws.com"

},

"Action": "sts:AssumeRole"

}

]

}
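A trust policy that omits either statement fails in different ways: without the first, Polytomic cannot connect; without the second, Redshift cannot read the staged files. The following sketch checks an existing trust policy document for both principals. The helper names are hypothetical, and the check ignores `Condition` blocks and `Deny` statements, so it confirms only that the expected principals are present.

```python
import json

POLYTOMIC_PRINCIPAL = "arn:aws:iam::568237466542:root"  # from the text above
REDSHIFT_SERVICE = "redshift.amazonaws.com"


def _as_list(value):
    """IAM allows a single value or a list in most elements; normalize to a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def assume_role_principals(policy_json: str) -> set:
    """Collect AWS and Service principals that Allow statements let call sts:AssumeRole."""
    policy = json.loads(policy_json)
    principals = set()
    for statement in _as_list(policy.get("Statement")):
        if statement.get("Effect") != "Allow":
            continue
        if "sts:AssumeRole" not in _as_list(statement.get("Action")):
            continue
        principal = statement.get("Principal", {})
        if isinstance(principal, dict):
            for kind in ("AWS", "Service"):
                principals.update(_as_list(principal.get(kind)))
    return principals


def trust_policy_problems(policy_json: str) -> list:
    """Return human-readable problems; an empty list means both principals were found."""
    found = assume_role_principals(policy_json)
    problems = []
    if POLYTOMIC_PRINCIPAL not in found:
        problems.append("Polytomic account is not allowed to assume the role")
    if REDSHIFT_SERVICE not in found:
        problems.append("Redshift service is not allowed to assume the role")
    return problems
```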

Authenticating with IAM Roles

See Using AWS IAM roles to access S3 buckets for detailed documentation on configuring Polytomic connections with IAM roles.
