Redshift

Source and destination

Syncing from Redshift

To use Redshift as a source, you only need to supply read-access to the database.

-

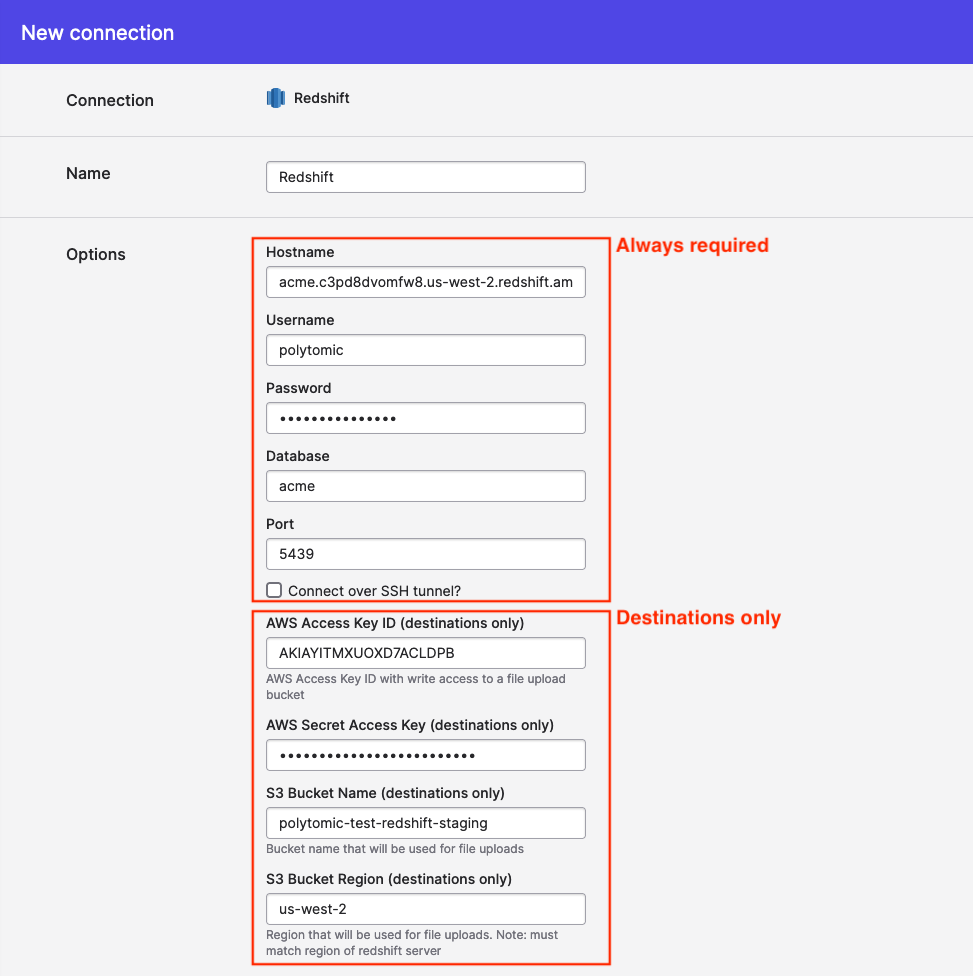

In Polytomic, go to Connections → Add Connection → Redshift.

-

Enter host and credential details

Note: The AWS Access Key/Secret Key and Bucket configuration are only required if you plan to use Redshift as a destination. If you will not be writing to Redshift, you can leave these fields blank.

Read permissions

The basic permissions cover what Polytomic needs to sync from Redshift (assuming your Redshift user is polytomic):

USAGEon any schemas that you expect to use with Polytomic- e.g.

GRANT USAGE ON SCHEMA my_schema TO polytomic;

- e.g.

SELECTon any tables/views that you intend to read from Polytomic- e.g.

GRANT SELECT ON ALL TABLES IN SCHEMA my_schema TO polytomic; - e.g.

GRANT SELECT ON ALL VIEWS IN SCHEMA my_schema TO polytomic;

- e.g.

Syncing to Redshift

If you wish to write to Redshift, there are two requirements:

- A user with write permission to the table, schemas, or database that you want to load data into.

- An S3 bucket in the same region as your Redshift server and an Access Key/Secret Key with read/write access to the bucket. Polytomic will use this bucket as a staging area for intermediate data. Files are removed once jobs complete (we also recommend setting a lifecycle policy on your bucket).

Once you have set up a user and an S3 bucket with an Access Key/Secret Key pair, enter those details in the connection configuration above.

Write permissions

Here is a query you can run in Redshift to grant general write permissions (this assumes your database name is my_database and your Redshift user/role is polytomic):

GRANT TEMP ON DATABASE my_database TO polytomic;

GRANT CREATE ON DATABASE my_database TO polytomic;

For those who want finer control, here are the list of permissions Polytomic requires:

TEMPINSERT,DELETE, andUPDATEon any tables that you intend to write into (including future tables, if applicable).TRUNCATEALTERCREATEon tablesCREATEon schemasDROP

S3

If you are using Redshift as a destination, you must provide an S3 bucket to use. Polytomic will use this bucket as a staging area for intermediate data.

Here's a sample access policy for S3:

{

"Statement": [

{

"Action": [

"s3:*Object",

"s3:ListBucket"

],

"Effect": "Allow",

"Resource": [

"arn:aws:s3:::your-bucket-name",

"arn:aws:s3:::your-bucket-name/*"

],

"Sid": "PolytomicS3BucketAccessRedshift"

}

],

"Version": "2012-10-17"

}

Troubleshooting

If Polytomic is unable to connect to Redshift, or times out, check the following.

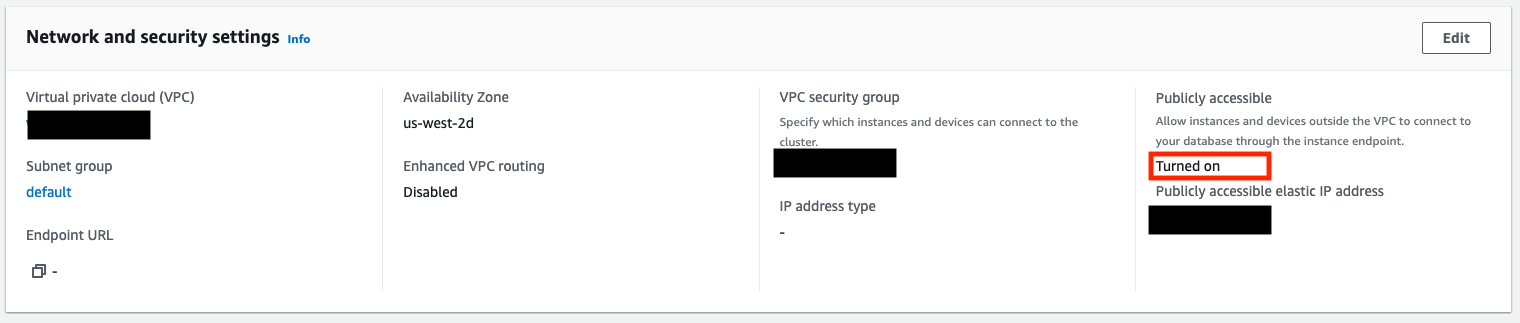

Public accessibility

Under Properties in the Redshift console, confirm that the cluster is publicly accessible.

If you do not wish to make the cluster publicly accessible you will need to use an SSH tunnel to provide access.

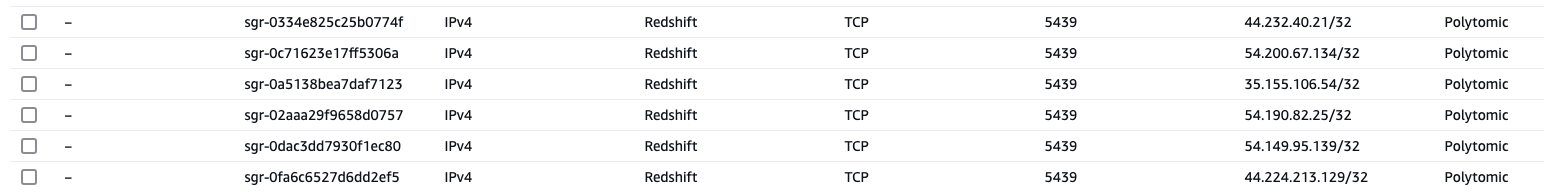

Allow traffic to and from Polytomic

Under Properties in the Redshift console, click on the VPC security group (located under the "Network and security settings" heading). This will take you to the security group, where you can define inbound and outbound security rules for your cluster.

On the Inbound Rules, verify that either all traffic (0.0.0.0/0) is allowed, or that the Polytomic Whitelist IPs are all listed. Note that these will be entered as /32 CIDR ranges.

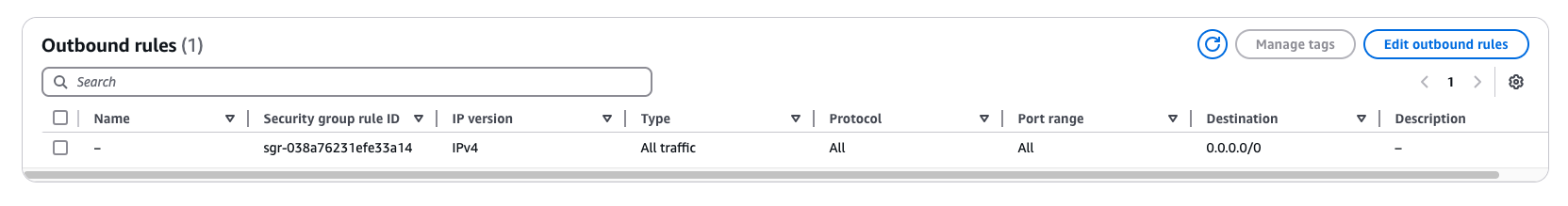

Under the Outbound Rules, most customers allow all outbound traffic (0.0.0.0/0); if your rules are more restrictive, ensure that the Whitelist IPs are again listed as CIDR ranges.

VPC Network ACLs

Network ACLs set for the VPC may also limit traffic to and from your Redshift cluster. For the VPC associated with your Redshift cluster, click on Network ACLs and verify that either 0.0.0.0/0 or the Polytomic Whitelist IPs are allowed for both inbound and outbound traffic.

Connecting via SSH

Polytomic supports connecting to Redshift via SSH. See Connecting via an SSH tunnel for more information.

Updated 8 months ago